2021. 5. 31. 19:24ㆍAI Paper Review/Deep RL Papers [EN]

<arxiv> https://arxiv.org/abs/2007.14430

0. TD error and bootstrapping in reinforcement learning

Munchausen Reinforcement Learning (M-RL) is actually a really simple idea. Bootstrapping is a core idea in reinforcement learning, especially when learning q-functions with a temporal-difference (TD) error. For example, the learning target needs the optimal q-function at t+1, which we don't know. So we replace the optimal q-function with our current estimate of it and use that as the target. This is known as bootstrapping in reinforcement learning.
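To make the bootstrapping idea concrete, here is a minimal sketch of a one-step Q-learning target and update in plain Python. The function names, the learning rate `lr`, and the `done` flag for terminal transitions are my own assumptions for illustration, not something taken from the paper.

```python
def td_target(reward, next_q_values, gamma=0.99, done=False):
    """Bootstrapped target: r + gamma * max_a' Q(s', a').

    next_q_values is our *current estimate* of Q(s', .), standing in
    for the unknown optimal q-function.
    """
    if done:
        # Assumption: no bootstrapping past a terminal state.
        return reward
    return reward + gamma * max(next_q_values)


def td_update(q_sa, target, lr=0.1):
    """Move Q(s, a) toward the target; also return the TD error."""
    td_error = target - q_sa
    return q_sa + lr * td_error, td_error


# Toy usage with made-up numbers.
target = td_target(reward=1.0, next_q_values=[0.5, 2.0], gamma=0.9)
new_q, td_error = td_update(q_sa=1.0, target=target, lr=0.5)
print(target, td_error, new_q)
```

The only "trick" is that `next_q_values` comes from the agent's own estimate, so the estimate is used to improve itself.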

1. Munchausen Reinforcement Learning (M-RL) framework

This paper argues that the q-function is not the only thing that can be used to bootstrap reinforcement learning. Let's assume that we have an optimal deterministic policy. Then the log policy is 0 for the optimal action and -infinity for suboptimal ones.

This is a really strong signal, and we can use it to bootstrap reinforcement learning. Of course, the optimal policy is unknown, so we replace it with our current policy. Doesn't that sound similar to the explanation above? That's right. We are using the log policy to bootstrap reinforcement learning.

How so? It's really simple. Just replace the reward with reward + alpha * log policy in any Temporal-Difference (TD) scheme.

*alpha is a constant that balances the reward and the log policy. It is set to 0.9 in this paper's Atari57 benchmark.

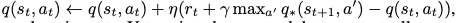
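The reward replacement described above can be sketched in a few lines. This is only an illustration under my own assumptions: the policy is taken to be a softmax over q-values with a temperature `tau`, and the helper names `log_softmax` and `munchausen_reward` are hypothetical.

```python
import math


def log_softmax(q_values, tau=1.0):
    """Log of a softmax policy over q_values / tau (numerically stable)."""
    scaled = [q / tau for q in q_values]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def munchausen_reward(reward, log_pi_a, alpha=0.9):
    """Augmented reward: r + alpha * log pi(a|s)."""
    return reward + alpha * log_pi_a


# Toy usage: two equally good actions, so pi(a|s) = 0.5 for both.
log_pi = log_softmax([1.0, 1.0])
print(munchausen_reward(reward=1.0, log_pi_a=log_pi[0]))
```

Since a log probability is never positive, the extra term acts as a penalty that is small when the current policy already strongly prefers the action that was taken.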

This idea can be applied anywhere a TD scheme is used. The paper applies the M-RL framework to two popular off-policy reinforcement learning algorithms: DQN (Deep Q-Network) and IQN (Implicit Quantile Network).

But applying M-RL to DQN raises a small issue: DQN computes a deterministic (greedy) policy, while M-RL assumes a stochastic policy. This is easily solved by modifying the regression target so that it maximizes not only the return but also the entropy of the resulting policy. Sound familiar? This is just a discrete-action version of SAC (Soft Actor-Critic), which the paper calls Soft-DQN.
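As a rough sketch of what such an entropy-regularized target could look like for discrete actions, the snippet below replaces the max over next-state q-values with an expectation under a softmax policy plus an entropy bonus. The softmax form of the policy, the temperature `tau`, its default value, and the `done` handling are assumptions of this sketch, so check the paper for the exact formulation.

```python
import math


def log_softmax(q_values, tau):
    """Log of a softmax policy over q_values / tau (numerically stable)."""
    scaled = [q / tau for q in q_values]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def soft_dqn_target(reward, next_q_values, gamma=0.99, tau=0.03, done=False):
    """r + gamma * sum_a' pi(a'|s') * (Q(s', a') - tau * log pi(a'|s'))."""
    if done:
        # Assumption: no bootstrapping past a terminal state.
        return reward
    log_pi = log_softmax(next_q_values, tau)
    pi = [math.exp(lp) for lp in log_pi]
    soft_value = sum(
        p * (q - tau * lp) for p, q, lp in zip(pi, next_q_values, log_pi)
    )
    return reward + gamma * soft_value


# Toy usage: with a small tau the soft value is close to the max q-value.
print(soft_dqn_target(reward=0.0, next_q_values=[0.0, 1.0], gamma=1.0, tau=0.03))
```

As `tau` shrinks, the policy becomes nearly greedy and the target approaches the ordinary DQN target. A larger `tau` puts more weight on the entropy term.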

Finally, we apply M-RL to Soft-DQN. The resulting algorithm is called M-DQN (Munchausen Deep Q-Network).
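Putting the two pieces together, an M-DQN-style target might be sketched as below. Treat this as my reading of the idea rather than the paper's exact algorithm: scaling the log policy by `tau`, clipping it to `[clip_min, 0]`, the default values of `tau` and `clip_min`, and the `done` handling are all assumptions of this sketch, and which network produces the q-values is left to the caller.

```python
import math


def log_softmax(q_values, tau):
    """Log of a softmax policy over q_values / tau (numerically stable)."""
    scaled = [q / tau for q in q_values]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def m_dqn_target(reward, q_values, action, next_q_values,
                 gamma=0.99, tau=0.03, alpha=0.9, clip_min=-1.0, done=False):
    """Soft-DQN target plus a Munchausen bonus on the taken action."""
    # Munchausen term: scaled log policy of the action actually taken,
    # clipped so that very unlikely actions do not blow up the target.
    log_pi_a = log_softmax(q_values, tau)[action]
    bonus = alpha * max(clip_min, min(0.0, tau * log_pi_a))
    if done:
        # Assumption: no bootstrapping past a terminal state.
        return reward + bonus
    # Soft (entropy-regularized) value of the next state.
    next_log_pi = log_softmax(next_q_values, tau)
    soft_value = sum(
        math.exp(lp) * (q - tau * lp)
        for q, lp in zip(next_q_values, next_log_pi)
    )
    return reward + bonus + gamma * soft_value


# Toy usage with made-up q-values.
print(m_dqn_target(reward=1.0, q_values=[0.2, 0.1], action=0,
                   next_q_values=[0.3, 0.0]))
```

Compared with the Soft-DQN target, the only change is the `bonus` term added to the reward, which is what makes this such a small modification in code.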

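This is a really simple modification of DQN, but it's very powerful and efficient. According to the paper, M-DQN is competitive with distributional methods on Atari without using distributional RL, n-step returns, or prioritized replay, and M-IQN outperforms Rainbow.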

Disclaimer:

This is a short review of the paper. It only shows a few essential equations and plots.

If needed, always read the full paper :)

Materials & Previous Works that you need to understand this post:

0. Reinforcement Learning: An Introduction (Sutton & Barto)

1. Playing Atari with Deep Reinforcement Learning (Mnih et al.)

2. Implicit Quantile Networks for Distributional Reinforcement Learning (Dabney et al.)

3. Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor (Haarnoja et al.)

4. Reinforcement Learning with Deep Energy-Based Policies (Haarnoja et al.)
